AI researchers help robots see underwater – technology that could save lives

AI researchers Kavyaa Somasundaram and Martin Längkvist at Örebro University, along with software developer Martin Karlsson at Piktiv AB, gather data in collaboration with Saab and Nerikes Brandkår. They are testing an underwater robot to gain a better understanding of how sonar images appear and how they should be interpreted.

By combining camera and sonar data, AI researchers at Örebro University have improved the visual capabilities of underwater robots. This enables Saab vehicle operators to perform their missions more efficiently, and for Nerikes Brandkår, it could be the difference between life and death. “This improves our chances of saving lives,” says Mats Hallgren at Nerikes Brandkår.

Submersibles can be utilised by various operators for a range of purposes, such as inspecting infrastructure like oil pipelines or searching for people lost underwater.

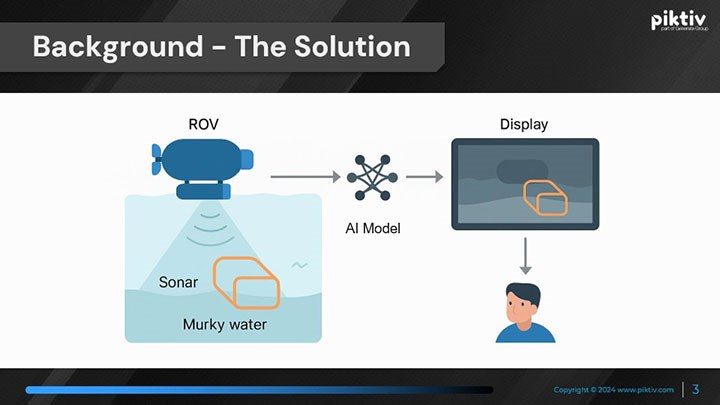

One problem for submersibles – underwater robot vehicles – is poor visibility in murky and dark water. To address this issue, Örebro University, through the regional development project AI.ALL, launched the Saight pilot project in collaboration with Saab, Nerikes Brandkår, and software developer Piktiv AB.

“Important to be involved in innovative developments”

“We manufacture submersibles that are often remotely controlled by an operator. We are working to make it easier for the operator when there is murky water. In these environments, camera visibility is very poor, and operators struggle to fulfil their tasks efficiently. The proposal is to use AI and augmented reality to combine a sonar sensor, which can see through murky water, with the existing camera,” says Gert Johansson at Saab.

“For us at Nerikes Brandkår, it’s important to be involved in innovative developments. This technology has the potential to simplify our everyday tasks in the future. We’ve encountered significant difficulties in locating individuals who’ve gone missing beneath the water’s surface. It’s not easy learning sonar and echo sounder technology. Here, we’re implementing AI to assist us as operators in interpreting the images. This enables us to locate a person at an earlier stage and shorten the time they’re under the surface,” says Mats Hallgren at Nerikes Brandkår.

The role of the software developer Piktiv AB in the project has been to design a simulation, similar to a computer game, that projects images from sonar and echo sounders, enabling various scenarios to be played out for training purposes.

“The greatest challenge for us has been working with AI models and getting them to perform well, which requires a lot of experimentation. It’s been a lot of fun working with Örebro University, Saab, and Nerikes Brandkår. It’s impressive to see the range of expertise in this project,” says Martin Karlsson at Piktiv.

Drilled ice holes to test drive underwater robot vehicles

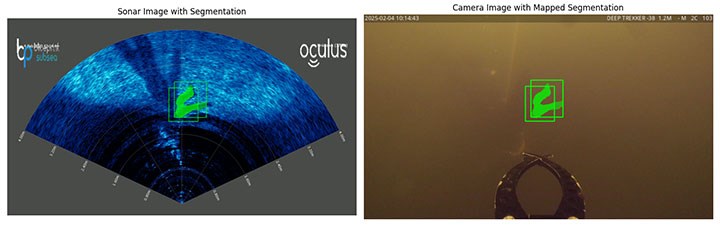

Kavyaa Somasundaram is the lead researcher in the Saight project. She is an industrial doctoral student at Örebro University, affiliated with Saab AB. She has developed a specialised AI algorithm to analyse data from the camera and sonar installed on the underwater robot, predicting where and what objects are in the environment around the robot – objects that would otherwise be difficult to detect with the human eye.

“One challenge was interpreting the sonar data. It’s garbled and contains a lot of noise. Sometimes, you have no idea what you’re even looking at. After a knowledge transfer with Saab and emergency services personnel, we’re able to understand better what we’re annotating in the data and what we want the AI model to learn,” says Kavyaa Somasundaram, and continues:

“You need to collect a lot of data and be on location to understand what you’re actually seeing. One bitterly cold day, we drilled a hole in the ice on a lake and deployed the robot to observe what a shadow looks like on the sonar image. This was an invaluable lesson for me.”

“With the help of this knowledge transfer, expertise in computer science, and newly collected data, Kavyaa Somasundaram and Örebro University were able to contribute to the scientific process of developing a new technological approach that has never previously been published or available for application,” says Martin Längkvist, technical project manager for Saight and senior lecturer in computer science at Örebro University.

He continues, “A major advantage of the method is that it’s easy to use. You don’t need to be an expert in interpreting sonar data or have prior knowledge of how AI technology works. It can operate in both clear and murky water without requiring the user to make any manual adjustments if visibility deteriorates while operating the submersible, which is a very common occurrence. In clear water, the algorithm automatically relies more on the camera, while in murky water it uses the data from the sonar more in its analysis.”
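The article does not describe how this weighting is implemented. Purely as an illustration of the idea, the following minimal Python sketch blends a camera-based and a sonar-based detection score using an estimated water visibility value. The function names, the `visibility` score between 0 and 1, and the linear blend are all assumptions made for this example and are not the Saight project's actual method.

```python
def fusion_weights(visibility: float) -> tuple[float, float]:
    """Return (camera_weight, sonar_weight) for a visibility score.

    `visibility` is a hypothetical estimate in [0, 1]:
    1.0 means clear water, 0.0 means fully murky water.
    """
    v = min(max(visibility, 0.0), 1.0)  # clamp out-of-range estimates
    return v, 1.0 - v


def fuse_scores(camera_score: float, sonar_score: float, visibility: float) -> float:
    """Blend two detection scores; the camera dominates in clear water,
    the sonar dominates in murky water (assumed linear weighting)."""
    w_camera, w_sonar = fusion_weights(visibility)
    return w_camera * camera_score + w_sonar * sonar_score


if __name__ == "__main__":
    # Clear water: the result follows the camera score.
    print(fuse_scores(camera_score=0.9, sonar_score=0.2, visibility=1.0))
    # Murky water: the result follows the sonar score.
    print(fuse_scores(camera_score=0.1, sonar_score=0.8, visibility=0.0))
```

In a learned system, the weighting would more likely be produced by the model itself from the sensor data rather than set by a fixed formula, which is what lets the operator avoid manual adjustments when visibility changes.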

According to Martin Längkvist, there is interest in further developing the method.

“Future development will involve training the AI algorithm on both more collected real data and simulated data. The challenge here is either to make the simulated data resemble real data or to further develop the AI model so that it cannot distinguish between real and simulated data,” says Martin Längkvist.

“Improving the odds of saving lives”

Kavyaa Somasundaram highlights that the outcomes of the project could significantly benefit numerous areas of society.

“I think there’s huge potential for this method. We’ve now shown how these algorithms can be used in underwater environments. When you think about it, the models can be applied in many other areas, such as autonomous vehicles or in mines, where similar problems arise, for instance, in foggy conditions with low visibility. If we can adapt the models to different application areas, I believe we could achieve very good results,” says Kavyaa Somasundaram.

Mats Hallgren from Nerikes Brandkår agrees and strongly believes that the AI algorithm can help save lives.

“When we’re in action, time is always part of the equation. The quicker we can find and rescue a person from the water, the better our chances of saving their life. And with this solution, it’s faster.”

Saight is a collaboration between Örebro University, Saab, Nerikes Brandkår and Piktiv AB. The project, which is part of the AI, Robotics and Cybersecurity Centre (ARC), is supported by the regional development project AI.ALL and co-funded by Region Örebro County and the European Union.


Text: Jesper Eriksson

Photo: Linda Harradine, Jesper Eriksson and private

Translation: Jerry Gray