SEK 4 million for research on more human-centred artificial intelligence

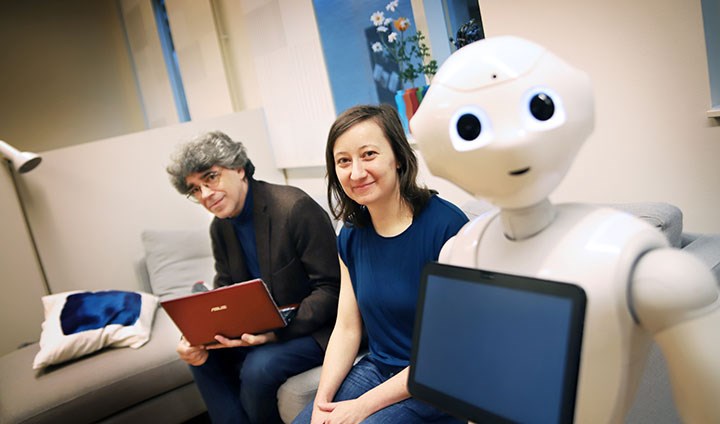

Professor Alessandro Saffiotti, AI researcher Jasmin Grosinger and Pepper, a semi-humanoid robot.

In the future, robots and AI systems that work alongside people will need to be more proactive and human-centred. This is the goal of a research project led by Alessandro Saffiotti, Jasmin Grosinger and Federico Pecora, which has received SEK 4 million in funding from the Swedish Research Council.

“We’re creating a model for how and when a robot should act,” says Alessandro Saffiotti.

Artificial intelligence with human-centred proactivity is the goal of a new research project led by Professor Alessandro Saffiotti and AI researchers Jasmin Grosinger and Federico Pecora. Their project has received SEK 4.04 million in funding from the Swedish Research Council.

But what does human-centred proactivity actually mean?

The Örebro researchers offer the following metaphor:

Imagine two colleagues at work, Mr I-don’t-get-it and Ms Insensitivity. Although both are good at what they do, they have their shortcomings. Mr I-don’t-get-it always needs to be told precisely what to do, never acts on his own initiative, and struggles with longer-term planning. Ms Insensitivity, on the other hand, likes telling everyone what to do, but she cannot explain her behaviour when she is held accountable.

These colleagues may sound impossible to work with, but this is precisely how artificial intelligence systems work today.

AI needs to be able to take initiative

In a well-functioning workplace, colleagues understand the bigger picture, act on their own initiative, and realise the consequences of their actions. In other words, they are proactive. They know not only their own preferences but also those of others, and they apply this understanding when interpreting orders and instructions instead of taking them literally. Good colleagues also do not impose their will on others; they are accommodating and able to explain their decisions. These characteristics are summed up in the concept of “human-centred”.

The challenge facing the Örebro researchers is to make AI systems and robots more human-centred and proactive.

“We’re creating a model for human-centred proactivity and developing reasoning algorithms that use this model to deduce how and when an AI system or robot should act,” says Alessandro Saffiotti.

“To achieve the project’s goals, we’ll develop methods and theories that are universal. This is important because it lets us develop an open-ended solution that can be used by more than one system. Our model could be used, for example, in an assistance robot that picks up medicine from the pharmacy or does the shopping when needed, without being asked. But it could also be used in an industrial robot on an assembly line, handing tools to its human co-worker at the right time, again without being told,” explains Jasmin Grosinger.

AI testing soon in a smart home environment

The researchers plan to assess their model in a variety of applications and environments. It will first be tested in a simulation of a city after an earthquake, and then implemented in a smart home environment with existing assistance robots.

“In our experiments, we’ll evaluate whether our proactive system leads to desirable end results – and whether these are human-centred,” concludes Alessandro Saffiotti.

Text and photo: Jesper Eriksson

Translation: Jerry Gray